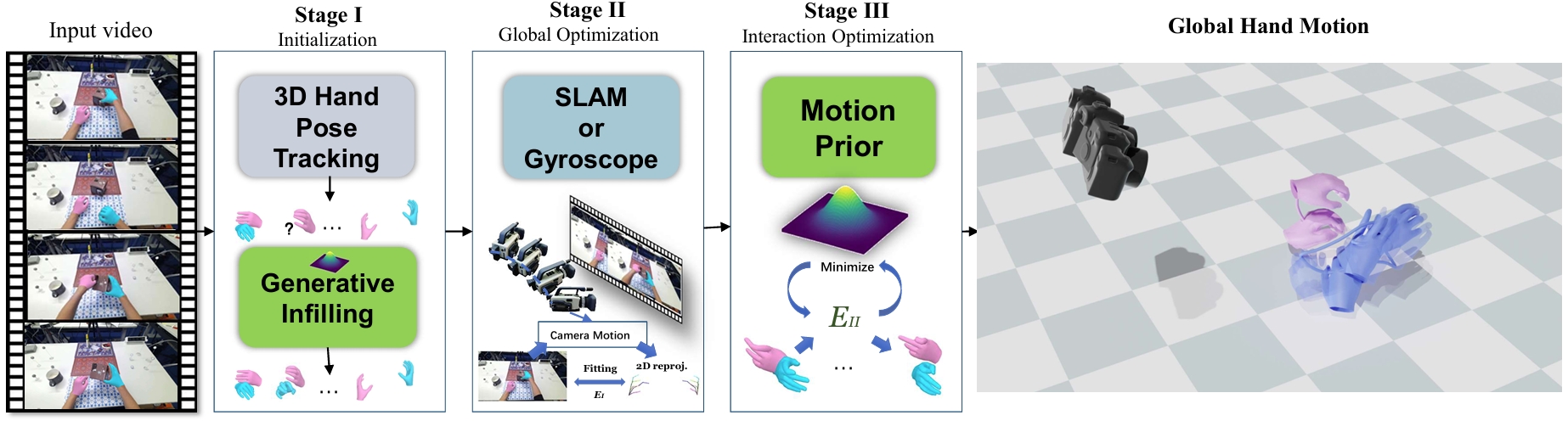

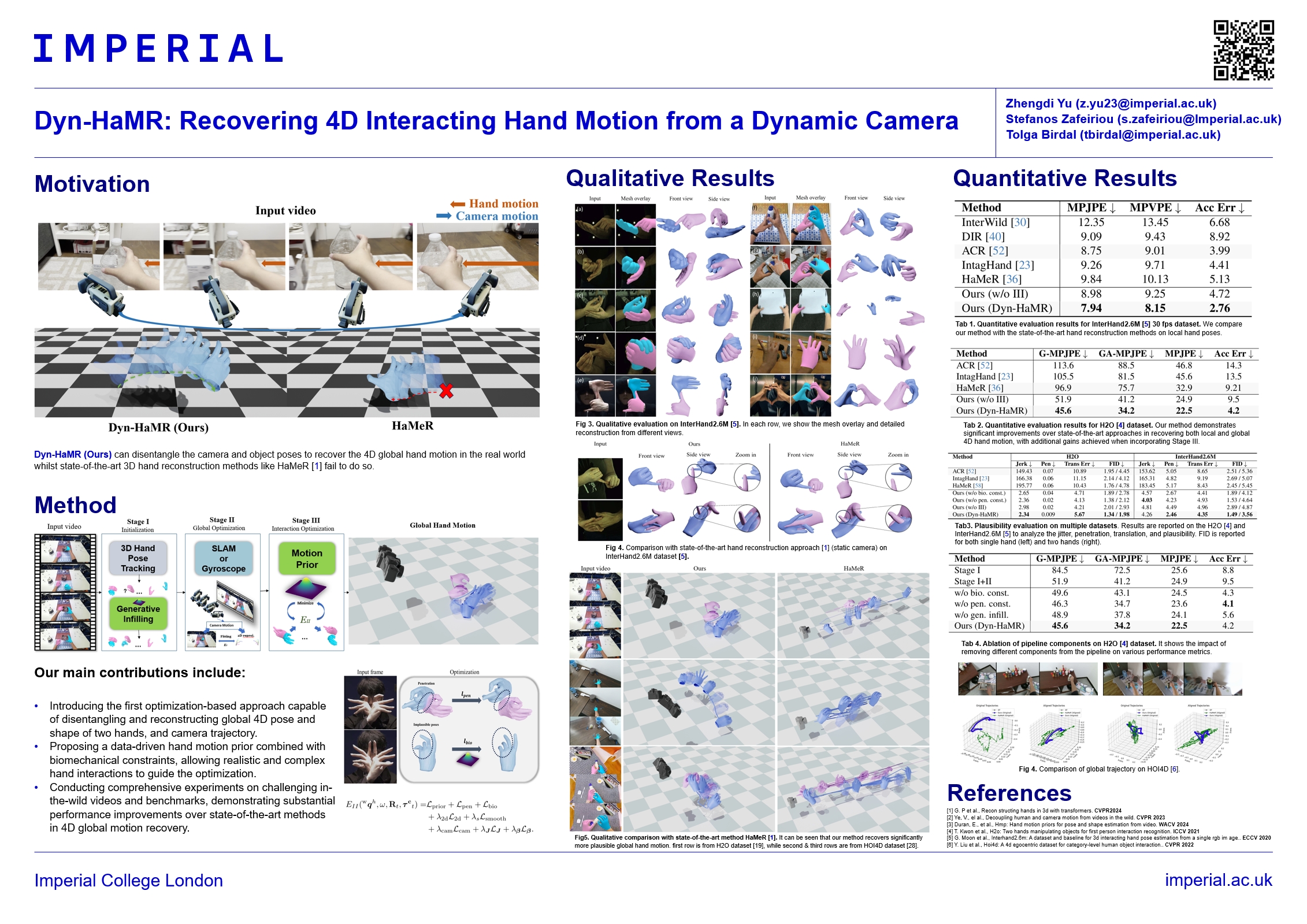

Pipeline Overview

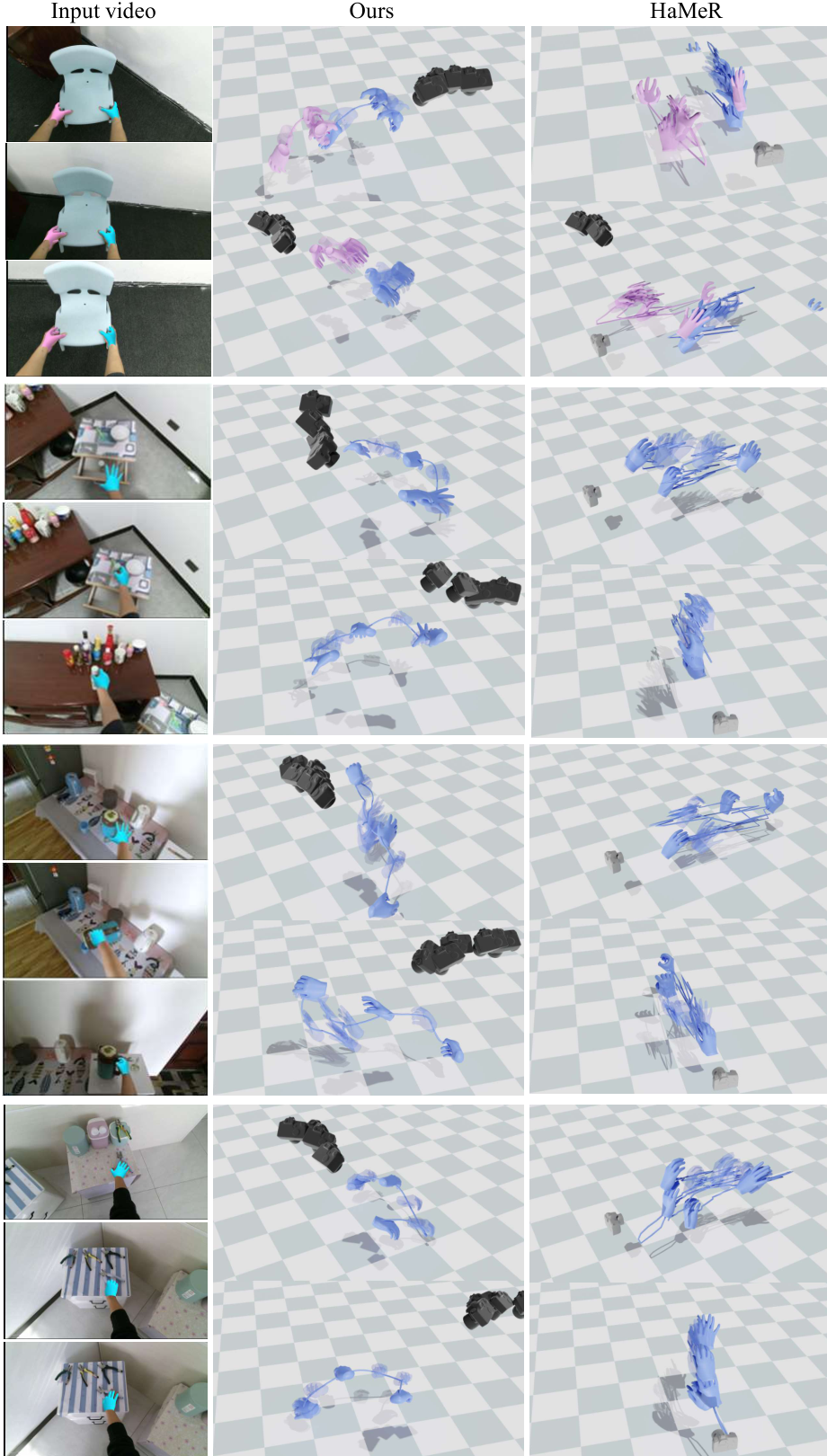

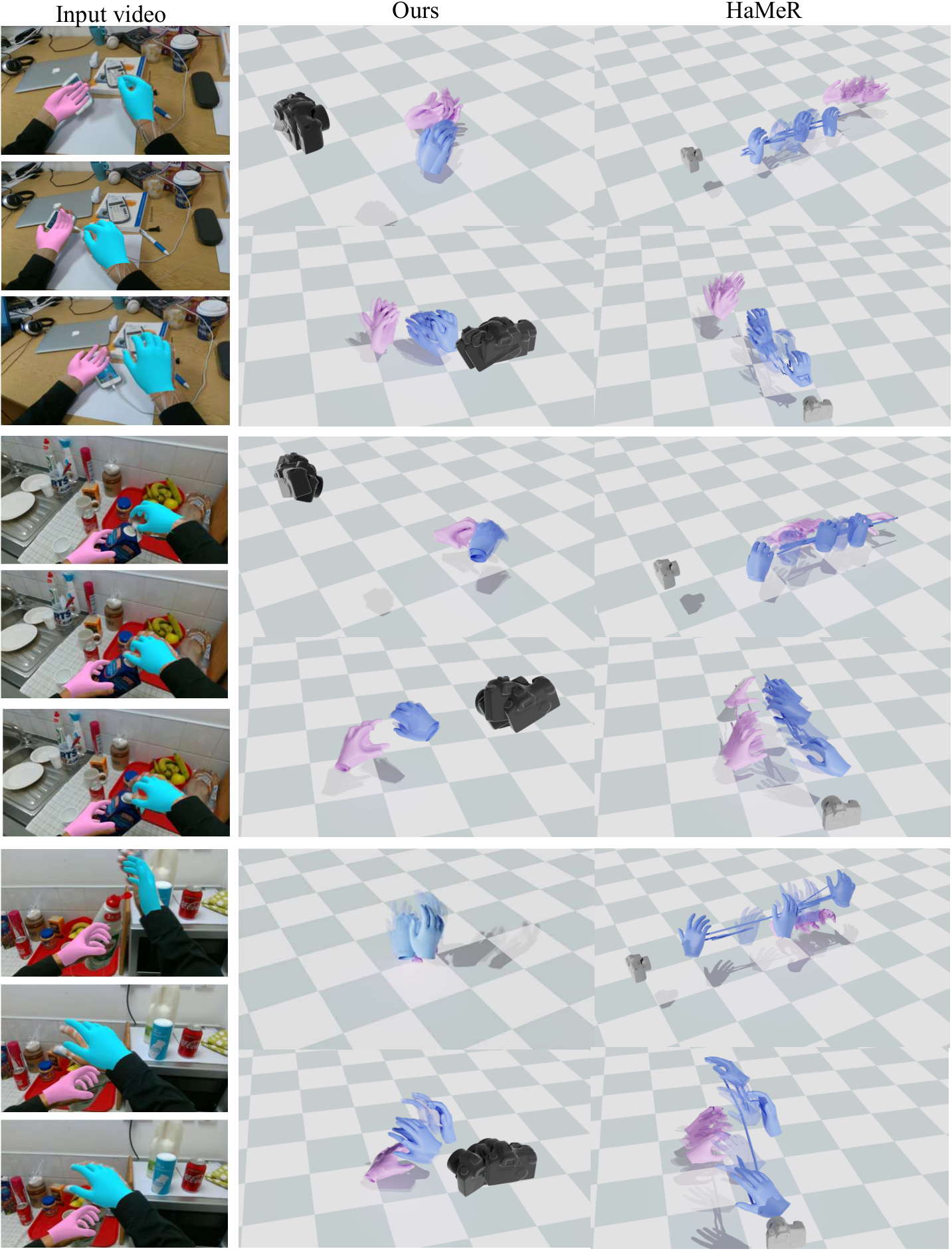

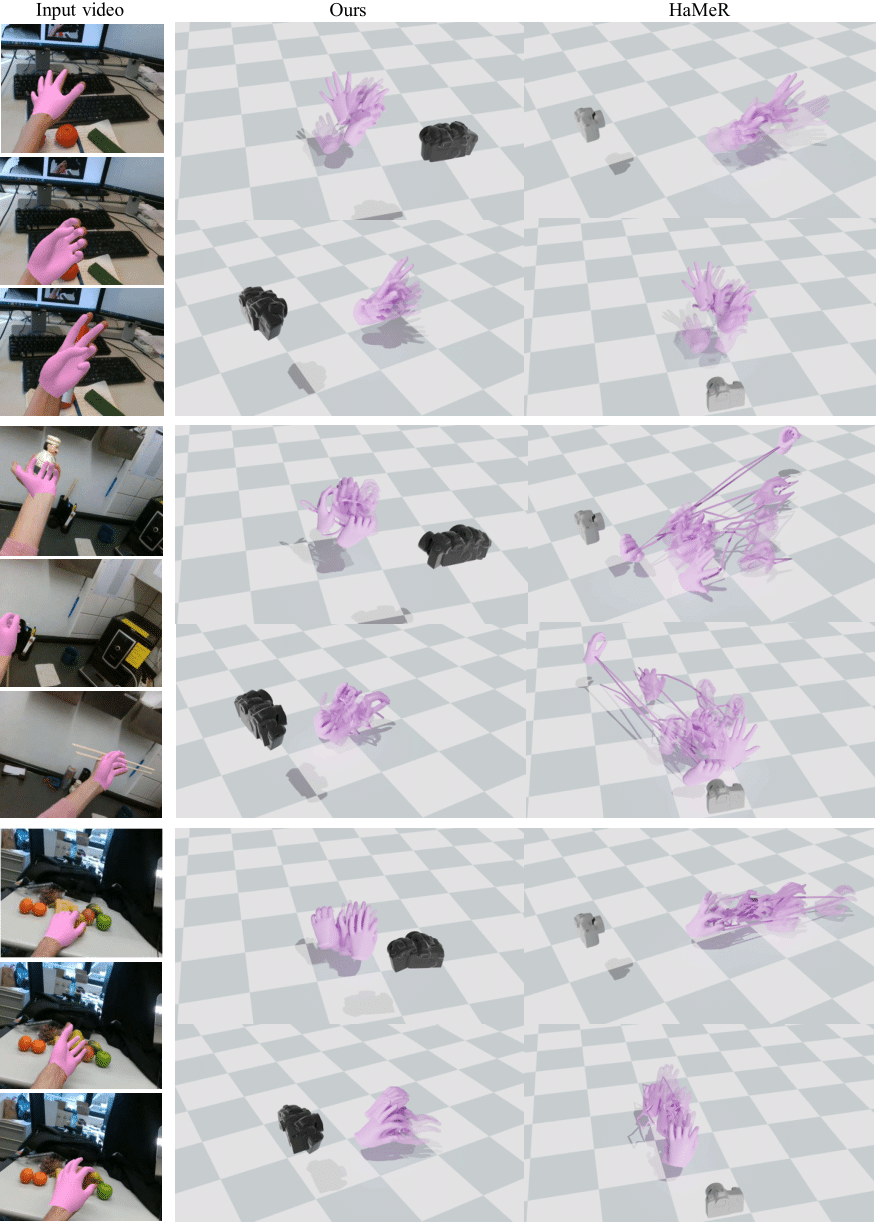

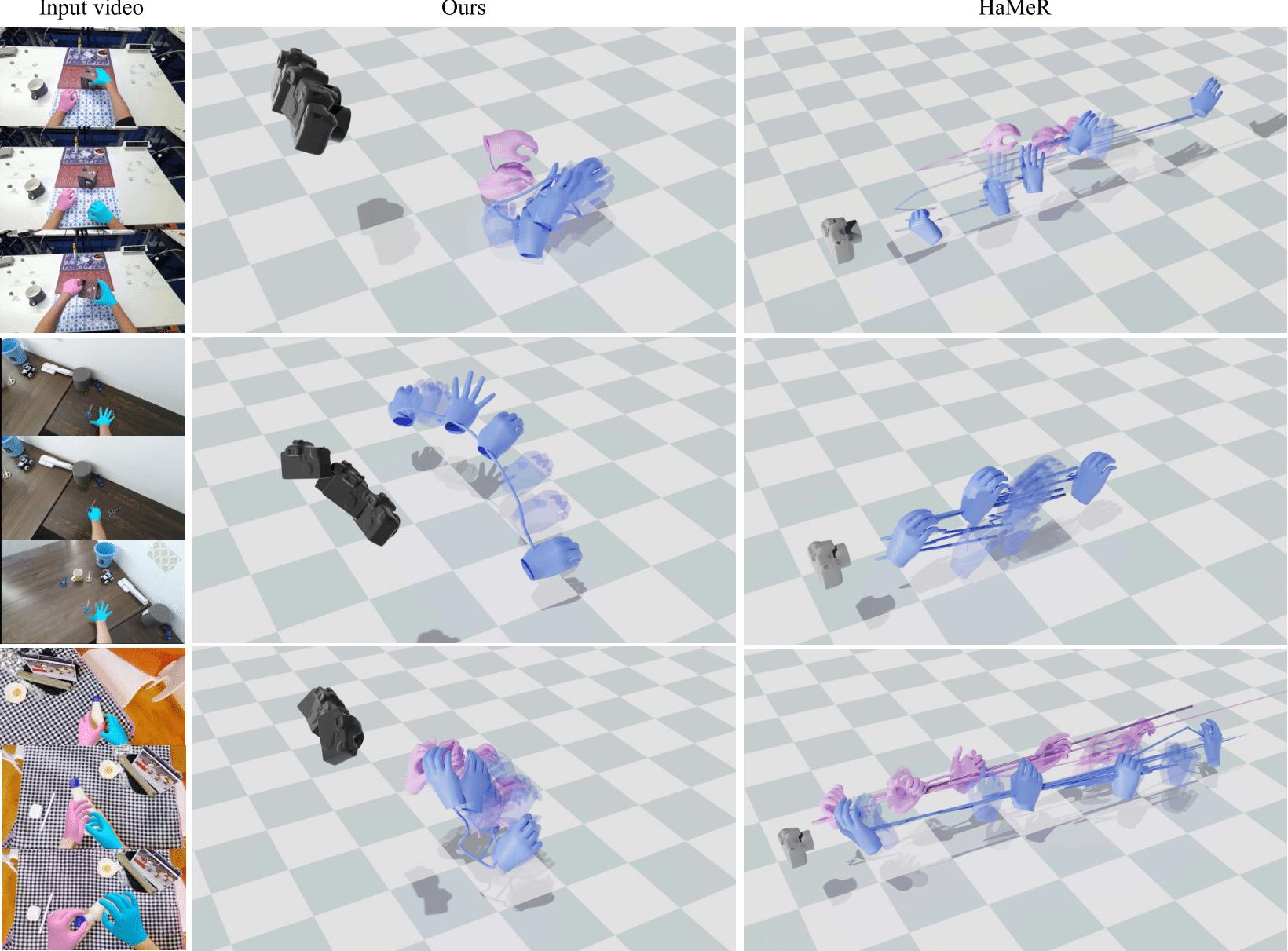

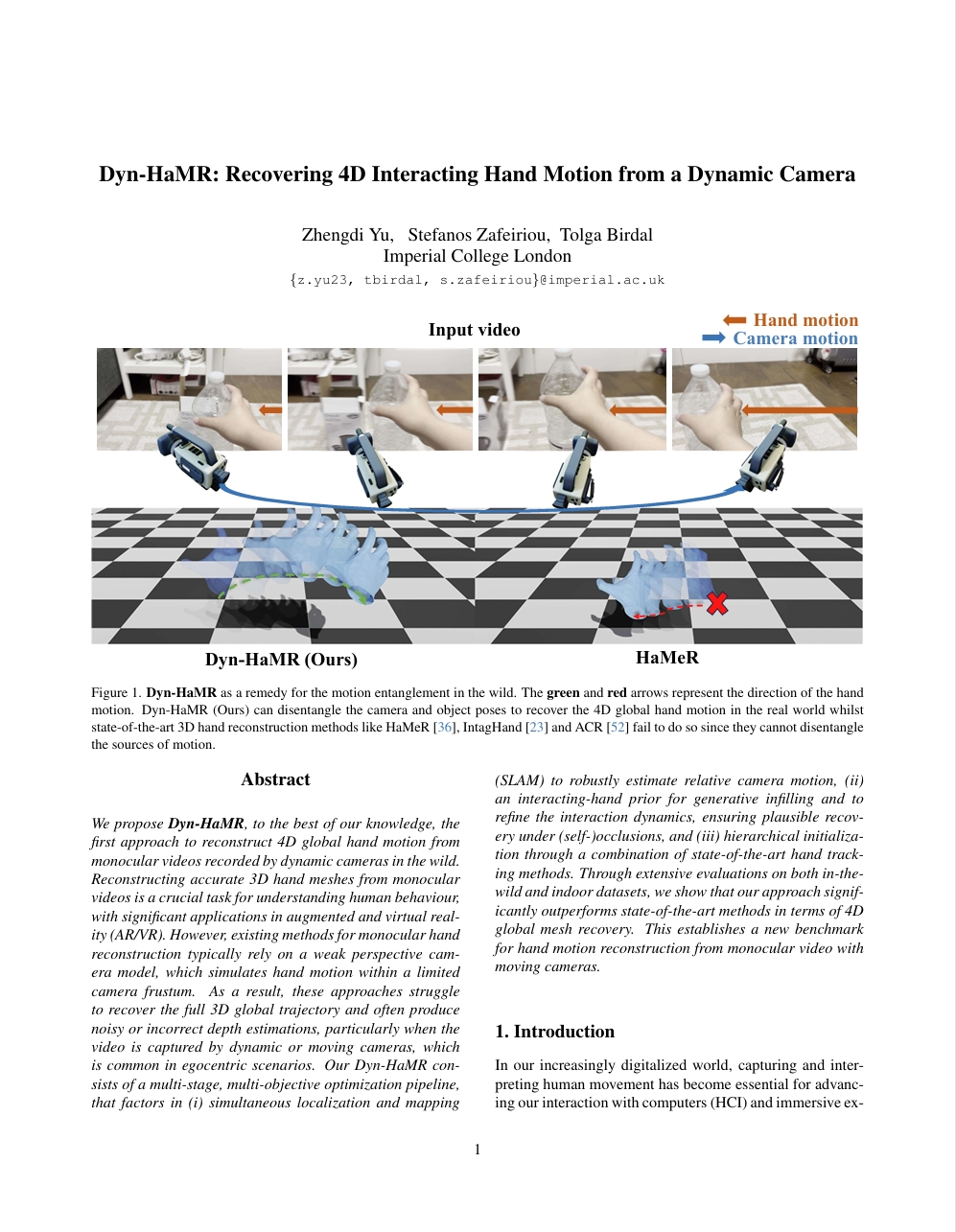

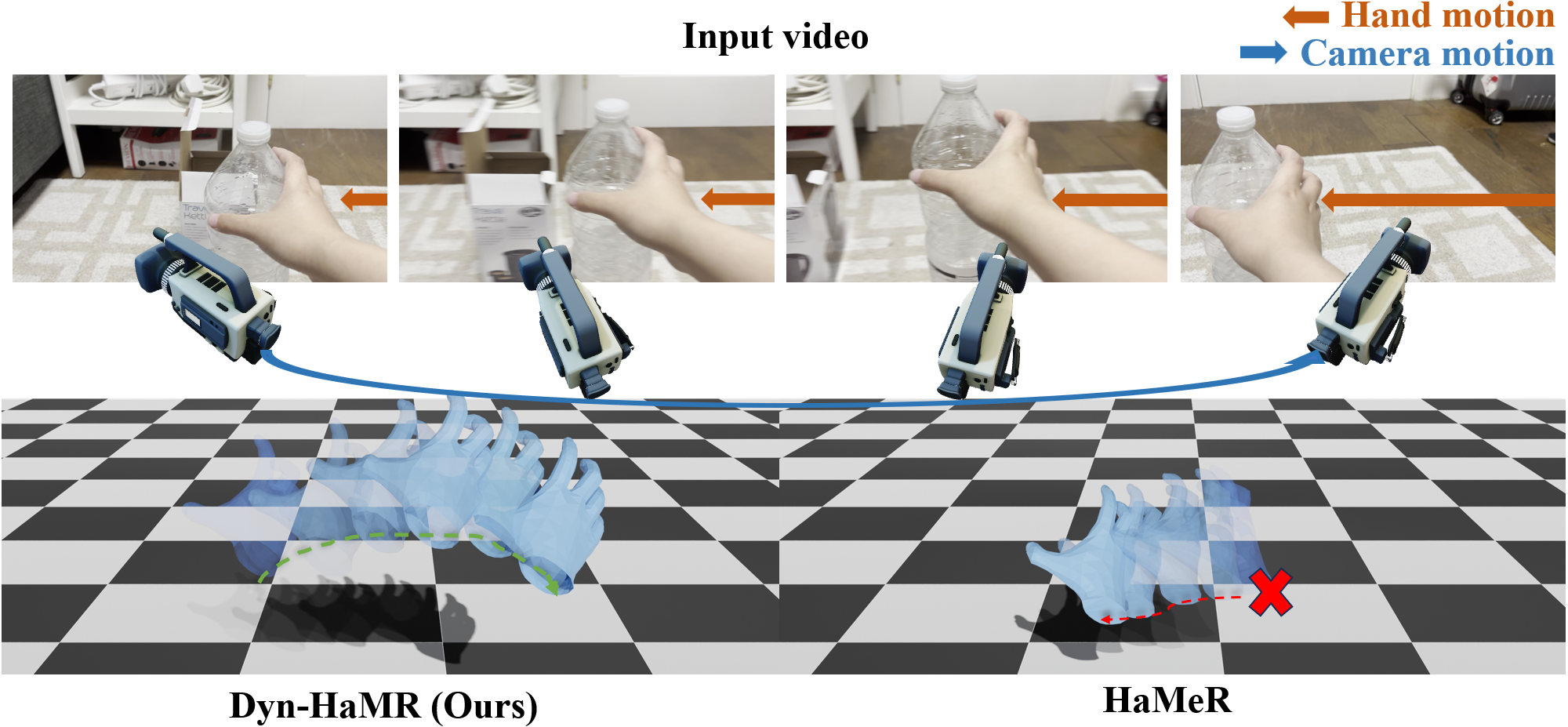

Dyn-HaMR as a remedy for motion entanglement in the wild. The green and red arrows indicate the direction of hand motion. Dyn-HaMR (Ours) disentangles the camera and object poses to recover 4D global hand motion in the real world, whereas state-of-the-art 3D hand reconstruction methods such as HaMeR, IntagHand, and ACR cannot separate these sources of motion and therefore fail to recover it.

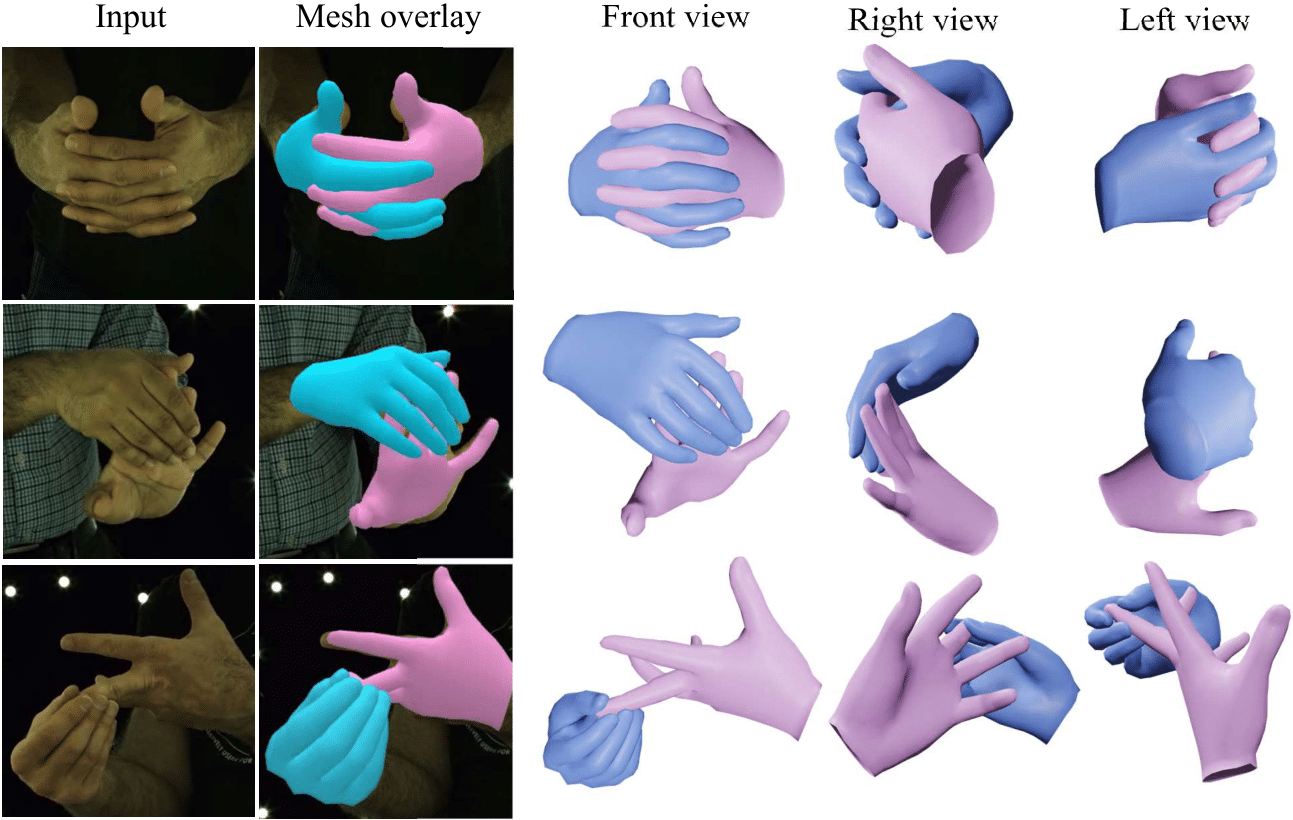

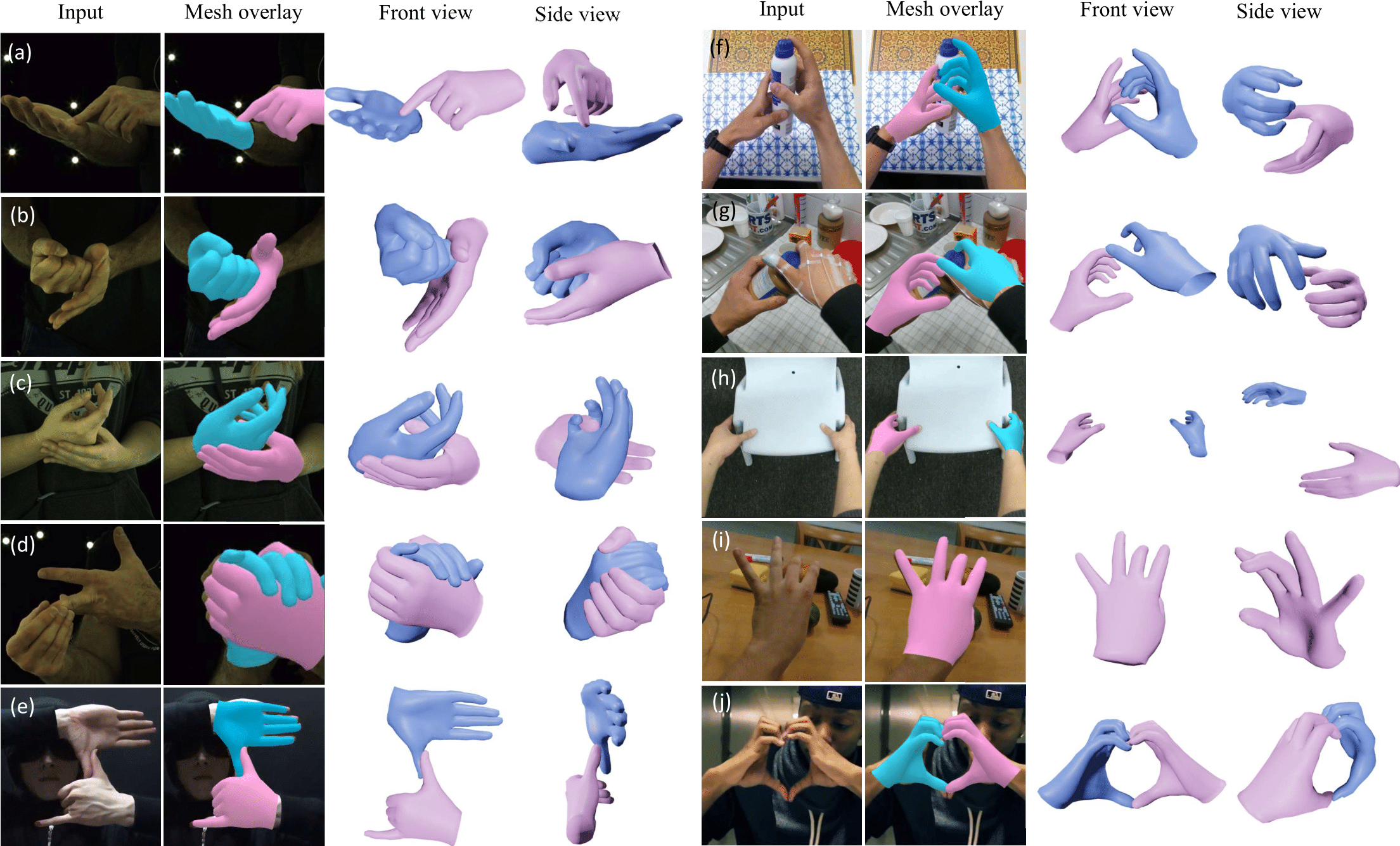

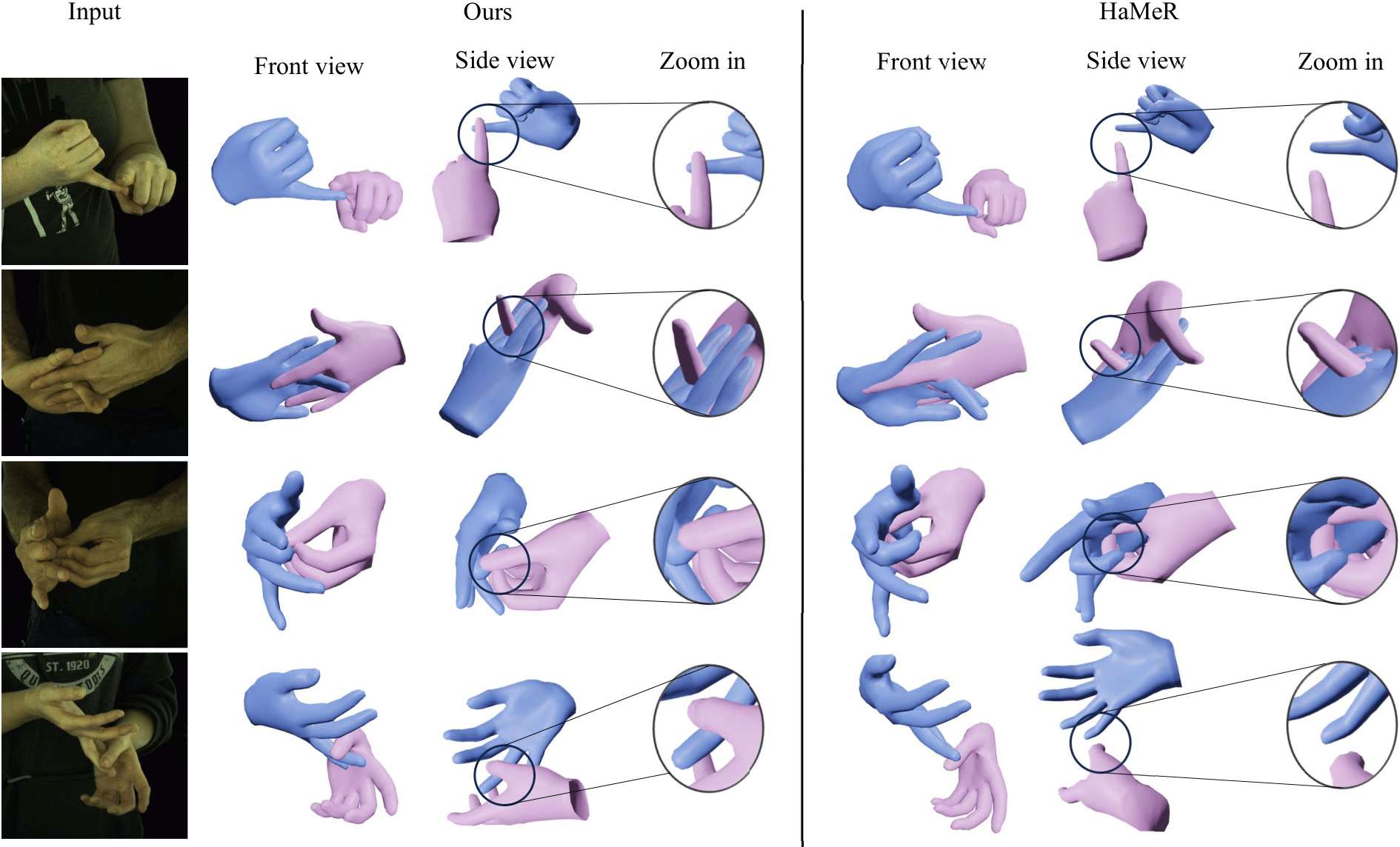

Dyn-HaMR is a three-stage optimization pipeline that recovers 4D global hand motion from in-the-wild videos, even those captured with dynamic cameras. Our method disentangles hand and camera motion and models complex hand interactions.
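To make the idea of disentangling hand and camera motion concrete, the following is a minimal sketch, not the Dyn-HaMR implementation. It assumes per-frame camera-to-world rotations and translations are already known (in practice they would have to be estimated), and it uses a single 3D point as a stand-in for the hand. The names `camera_to_world`, `R_wc`, `t_wc`, and `p_cam` are hypothetical and chosen only for illustration.

```python
import numpy as np

def camera_to_world(R_wc, t_wc, p_cam):
    """Map per-frame camera-frame points to the world frame.

    R_wc: (T, 3, 3) camera-to-world rotations (assumed given)
    t_wc: (T, 3)    camera positions in the world (assumed given)
    p_cam: (T, 3)   hand point expressed in each frame's camera coordinates
    """
    return np.einsum("tij,tj->ti", R_wc, p_cam) + t_wc

# Toy scenario: the hand is static in the world, the camera slides along x.
T = 4
R_wc = np.tile(np.eye(3), (T, 1, 1))
t_wc = np.stack([np.arange(T, dtype=float), np.zeros(T), np.zeros(T)], axis=1)
p_world_true = np.array([0.0, 0.0, 2.0])

# What a camera-frame method observes: p_cam = R_wc^T (p_world - t_wc).
p_cam = np.einsum("tji,tj->ti", R_wc, p_world_true - t_wc)

# Composing with the camera pose removes the camera-induced motion.
p_world = camera_to_world(R_wc, t_wc, p_cam)

apparent_motion = np.linalg.norm(p_cam[-1] - p_cam[0])    # motion seen in camera frame
global_motion = np.linalg.norm(p_world[-1] - p_world[0])  # motion in world frame
print(apparent_motion, global_motion)
```

In this toy case the hand appears to travel in the camera frame even though it never moves in the world, which is the entanglement that camera-frame reconstruction methods cannot resolve on their own.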